Elon Musk recently went on the record with what might be his most ridiculous prediction yet:

“2029 feels like a pivotal year. I’d be surprised if we don’t have AGI by then. Hopefully, people on Mars too.”

Elon Musk (@elonmusk), May 30, 2022

To the layperson unfamiliar with computer science, this probably just seems like a really smart person being optimistic. But to most people who understand the basics of AI, this is the kind of statement you’d expect from a mainstream journalist with a limited understanding of the topic.

I’m not sure there’s a single serious person working in the field of AI (aside from investors) who’d co-sign Musk’s belief, no offense meant to the abstract dreamers out there. In fact, a coalition of experts has been formed with the sole intent of asking the world’s richest blusterer to put his money where his mouth is.

[Embedded tweet from Gary Marcus 🇺🇦 (@GaryMarcus), May 30, 2022]

Betting against the world’s richest person

Artificial intelligence luminary and co-author of the best-selling book “Rebooting AI,” Gary Marcus, originally laid the challenge down. Marcus, an NYU professor who’s made his bones debating the likes of Yoshua Bengio and Yann LeCun (two people who are absolute rock stars in the field of machine learning), first offered Musk a $100,000 wager. The terms, per a Substack post from Marcus, are as follows:

In 2029, AI will not be able to watch a movie and tell you accurately what is going on (what I called the comprehension challenge in The New Yorker, in 2014). Who are the characters? What are their conflicts and motivations? etc.

In 2029, AI will not be able to read a novel and reliably answer questions about plot, character, conflicts, motivations, etc. Key will be going beyond the literal text, as Davis and I explain in Rebooting AI.

In 2029, AI will not be able to work as a competent cook in an arbitrary kitchen (extending Steve Wozniak’s cup of coffee benchmark).

In 2029, AI will not be able to reliably construct bug-free code of more than 10,000 lines from natural language specification or by interactions with a non-expert user. [Gluing together code from existing libraries doesn’t count.]

In 2029, AI will not be able to take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

Here’s the thing, folks: Marcus (in my opinion) isn’t trying to make a buck off Musk, and it sure doesn’t look like he’s trying to drum up controversy to sell something. He, like many other AI experts, appears to be concerned with the negative effects Musk’s rhetoric could have on the rest of the field. AI isn’t magic; it’s science. And it’s nearly impossible to inform the public about the actual state of AI technologies (my job, here at TNW’s Neural vertical) when people like Musk are out there making claims so outlandish they fall into fantasy fan-fiction territory.

We’ve been here before

Remember when Musk declared driverless cars were just around the corner, that they’d be here within two years? Then he said they’d be here the next year. And the next. And the one after that. Musk was promising driverless cars in 2014 (and every year after that!).

And then there’s Hyperloop. Musk was going to dig tunnels all over the world, fill them with magnetic levitation technology, and ferry passengers underground in specialized autonomous vehicles capable of shooting through the Boring Company’s tubes faster than a Boeing jet. Unfortunately, nobody bothered to tell Musk that maglev tech, as he envisions it, doesn’t exist. What did we get? A tunnel where 1-3 people at a time can get into a Tesla car, driven by a Tesla employee, that slowly meanders its way through a crowded tube where cars are queued up due to traffic. It’s been called a deathtrap.

In fact, it seems like every single one of Elon Musk’s endeavors, with the exception of maybe PayPal and SpaceX, is built on a bedrock of bullshit. Neuralink? The company Musk said was going to “cure autism”? Never mind that thousands of scientists, technologists, and engineers have dedicated their entire lives over the past century to developing brain implants to treat medical conditions (and that autistic people don’t need to be “cured,” because autism isn’t a disease).

And that brings us to the pinnacle of cow manure-based technology that is the Tesla “Optimus” robot.

Robotics are super easy, wink-wink

Optimus was announced last year as a Tesla project to create a humanoid robot capable of doing the boring and dangerous chores humans don’t want to do. The announcement video demonstrates how much progress Tesla had made on the project before the announcement: exactly zero. What you see in that video is a human wearing spandex. Impressed? Now, we’re to believe that the company will have a functioning prototype to show off by September:

[Embedded tweet from Elon Musk (@elonmusk), June 3, 2022]

I’ll go on the record right now: there’s absolutely no way Tesla demonstrates a humanoid robot capable of anything more than MIT-level feats of robotics in September. That’s not an insult to MIT’s robotics lab. It’s a jab at anyone who thinks building robots is easy. And it’s a 15-minute-long belly laugh in the face of anyone who believes Tesla can show off a machine that, in any way, convinces the AI community it’ll be capable of safely operating autonomously in unmapped home spaces by 2029.

But nobody gives a crap what I think. Elon Musk is the richest man alive and I’m just a journalist who should learn to code. I asked Gary Marcus what he thought about the upcoming Tesla AI Day, and whether Optimus was going to be ready for primetime by then. He seemed a bit skeptical too:

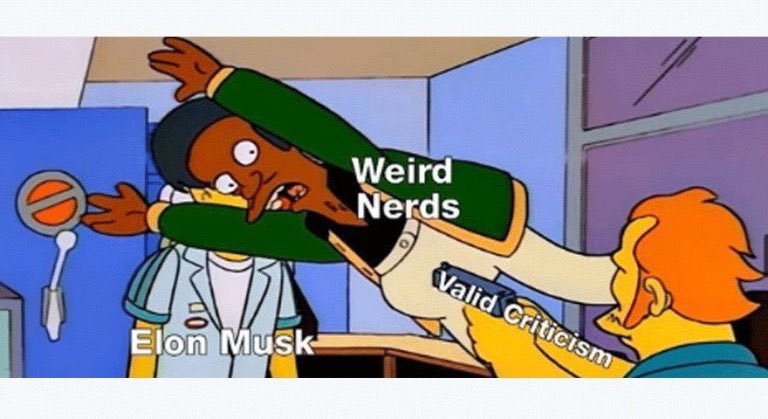

“There are dozens of incredibly difficult problems to be solved, ranging from the control of physical motion, to the language understanding in deciding what people want, to the planning in deciding how to do whatever is wanted safely and efficiently. But I also note that we are still waiting on Tesla’s autonomous taxi fleets. General-purpose robots are in many ways even more challenging, because the variety of human experience is so vast. We’ve all seen the cool videos of dancing robots from Boston Dynamics; building truly general purpose humanoid robots at a scale, with all the AI that would require, would be something else again. I don’t see it happening this decade.”

The full $500K bet

To that end, a coalition of sanity-speakers has sprung up around Marcus. Here’s a snippet from an article published in Fortune today by Marcus and Vivek Wadhwa, an AI expert from Carnegie Mellon:

“Within a couple hours there was a flurry on Twitter and Marcus’s substack had close to 10,000 views, and soon other experts in the field also offered their support for the wager, increasing the pool to $500,000. But not a word from Musk. Then writer and futurist Kevin Kelly, who co-founded the Long Now Foundation, offered to host the bet on his website side by side with an earlier, and related bet that Ray Kurzweil made with Mitch Kapor.”

That kind of money seems like a lot to a lowly writer like myself, but Elon Musk could blow $500K a day for the rest of his life and still have a fortune left over.

So what’s the holdup, Musk? It’s virtually impossible to imagine that you haven’t heard about the bet. For someone who spends a significant amount of their time trolling people on Twitter and trashing the media, you’ve always been incredibly sheepish when it comes to debating (or even disputing) the words of anyone who even slightly appears to know what they’re talking about in the field of AI.

That, dear readers, is why I’m declaring Musk the official “CEO” of tech: the Chicken Executive Officer.
Cluck cluck, Musk fans. Cue the memes.